MeasureCamp Czechia 2023

The CF Agency data crew went to MeasureCamp Czechia 2023 and survived. Here's what we learnt.

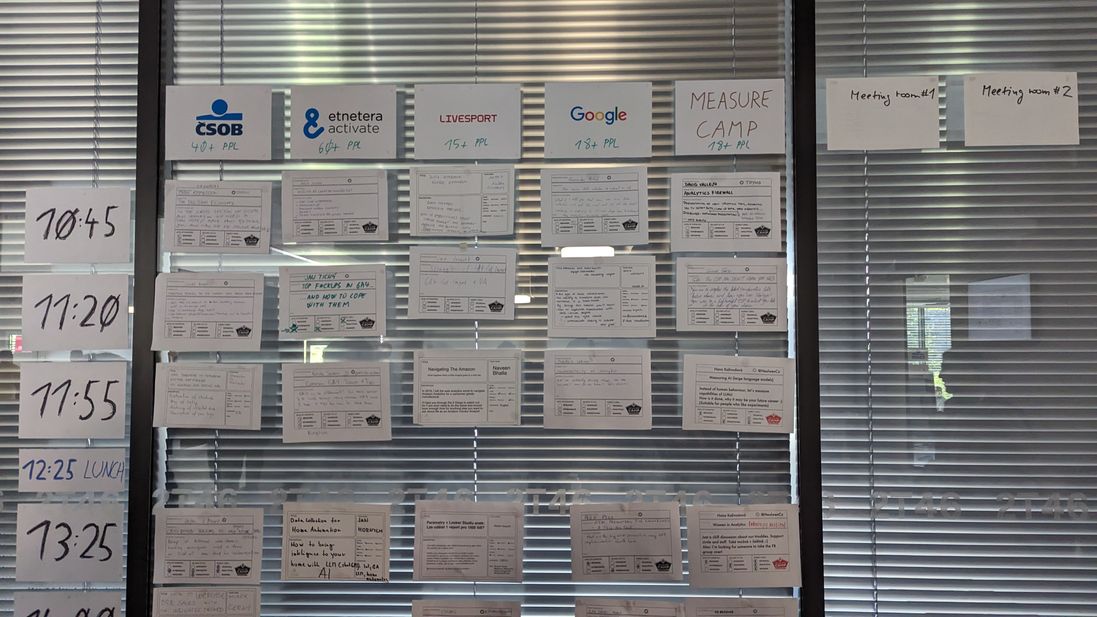

- A bunch of analysts and data engineers gather in one place.

- Anyone interested in talking about anything analytics-related may run a session.

- Speakers fill out forms about their sessions. Organisers nail the forms to the wall.

- Everyone attends sessions according to their own preference.

Please, do not mistake the following article for unbiased reporting. It is an account of how we saw the event. We missed quite a few sessions. Also, in case I got your name wrong, slam me for it on LinkedIn.

Without further ado, let's look at the sessions we found interesting. They are carefully sorted by the most representative criterion - how much information we were collectively able to retain the morning after the MeasureCamp party.

Analytics Firewall

David Vallejo introduced his latest work in progress - the analytics firewall. It works like a spam filter for your GA4 hits. Every request goes to a proxy, gets evaluated, and is sent to the GA collection endpoint.

Requests receive a score based on several criteria, like Session ID timestamp not matching the current day, IP Address location, and many more. Some requests with a very high spam probability score may be stopped before they hit your GA property.

This tool is supposed to weed out the worst offenders so that the most polluted data never reaches your GA4 UI or the BigQuery export. Furthermore, it should probably provide a score that you can later use to filter out some events in BQ.
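To make the scoring idea less abstract, here's a minimal Python sketch. It is our own illustration, not David's code: the weights, the threshold, the field names and the set of suspicious countries are all made up, and we assume the session ID is a Unix timestamp in seconds.

```python
from datetime import datetime, timezone

# Hypothetical weights and threshold, chosen only for illustration.
WEIGHTS = {
    "stale_session_id": 0.6,
    "suspicious_country": 0.3,
    "missing_user_agent": 0.2,
}
BLOCK_THRESHOLD = 0.8


def spam_score(hit: dict, now: datetime, suspicious_countries: set) -> float:
    """Return a spam score between 0 and 1 for a single hit."""
    score = 0.0
    # Assumption: the session ID is the session start as a Unix timestamp in seconds.
    session_day = datetime.fromtimestamp(hit["session_id"], tz=timezone.utc).date()
    if session_day != now.date():
        score += WEIGHTS["stale_session_id"]
    if hit.get("country") in suspicious_countries:
        score += WEIGHTS["suspicious_country"]
    if not hit.get("user_agent"):
        score += WEIGHTS["missing_user_agent"]
    return min(score, 1.0)


def should_forward(hit: dict, now: datetime, suspicious_countries: set) -> bool:
    """Forward the hit to the collection endpoint unless its score is too high."""
    return spam_score(hit, now, suspicious_countries) < BLOCK_THRESHOLD
```

A single failed check only raises the score. In this sketch a hit gets blocked once several signals add up, which keeps a legitimate session that started just before midnight from being thrown away.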

Also, props to David for crafting his slides by hand the night before the session!

Neat GTM Event Parameters

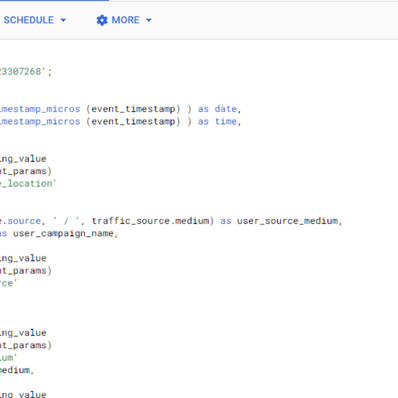

Fred Pike from Northwoods talked about event parameters that he always tracks in his implementations.

Tracking a Container ID, Container Version and a Tag Name helps you big time when you're debugging. In case something goes awry in the production environment, you'll know which tag is the culprit.

Sending a custom hit timestamp is useful in two ways.

- Firstly, the custom timestamp is human-readable. Not many of us can convert Unix timestamps to date and time in our heads. If you can, hit us up; we might have a job for you.

- Secondly, the event_timestamp column in BigQuery shows you when the hit was processed, not when it actually happened. That's what your custom timestamp is for. Simo has a very nice guide to implementing this.
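For those of us who can't do the conversion in our heads, here's a small Python sketch. It assumes the value is in microseconds since the Unix epoch in UTC, which is how the BigQuery export stores event_timestamp. The function name is ours.

```python
from datetime import datetime, timezone


def to_readable(event_timestamp_micros: int) -> str:
    """Convert microseconds since the Unix epoch to an ISO 8601 string in UTC."""
    seconds, micros = divmod(event_timestamp_micros, 1_000_000)
    moment = datetime.fromtimestamp(seconds, tz=timezone.utc)
    return moment.replace(microsecond=micros).isoformat()


print(to_readable(1_694_865_600_000_000))  # 2023-09-16T12:00:00+00:00
```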

If you have a B2B site, chances are you are tracking offline conversions. You need ad click identifiers like GCLID and MSCLKID for that. Extract them from the URL query parameters and send them as a user property.
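In GTM you would do the extraction with a URL variable or a bit of JavaScript. The logic itself is simple, though, and the following Python sketch shows it. The function name and the example URLs are ours, and we assume the identifiers arrive as the lowercase gclid and msclkid query parameters.

```python
from urllib.parse import parse_qs, urlsplit

# Query parameter names that carry the ad click identifiers.
CLICK_ID_PARAMS = ("gclid", "msclkid")


def extract_click_ids(url: str) -> dict:
    """Return the click identifiers found in the URL's query string."""
    query = parse_qs(urlsplit(url).query)
    # parse_qs skips parameters with blank values, so every hit here is non-empty.
    return {name: query[name][0] for name in CLICK_ID_PARAMS if name in query}


print(extract_click_ids("https://example.com/pricing?utm_source=google&gclid=abc123"))
```

The returned dictionary can then be attached to the user, so that the identifier is still around when the offline conversion finally happens.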

Fred also mentioned Type and Subtype tracking, but that's a story for another time.

Data Democratisation

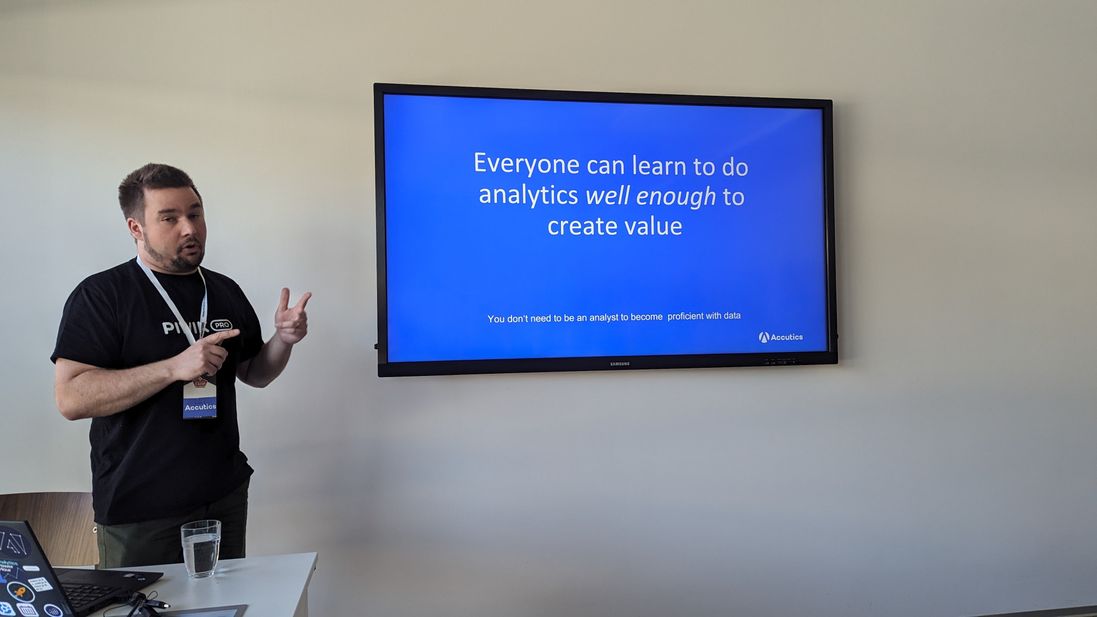

Frederik Werner from Accutics held a session about increasing the value of analytics within a company. The main argument was that once we make data a part of everyone's job, the whole firm is going to benefit.

Analysts should strive for a sweet spot between data quality, availability and the level of understanding. Once that is implemented in every department, the company will start making better decisions as a whole.

Parameters in Looker Studio

Martin Neuschl talked about data visualisation in Looker Studio (formerly Google Data Studio). The main focus was the parameters feature, which was introduced in 2021.

Martin's goal was to create a universal and embeddable report that could be used by thousands of people within a company. It was supposed to offer features like filtering by e-mail address, row-level security and deeper insight into URL parameters. Unfortunately, all of these desired features ran into issues with security, maintenance or scalability.

We've learnt that Looker Studio forbids embedding reports that utilise parameters without authentication. One way around this would be to have developers build user authentication, but even then protecting user data would need additional security layers.

Moral of the story: if you want to embed a report with advanced features, look elsewhere. You might be better off using a Python library like Streamlit. Sad, but very educational.

ChatGPT - Advanced Data Analysis (ADA)

This discussion-style session showcased the use of Advanced Data Analysis (ADA), formerly Code Interpreter, in ChatGPT Plus.

You can upload files into ChatGPT and let it find relationships among them. We are a tad skeptical. The session was filled with practical tips from audience members who have already been using this new tool on a daily basis. The participants shared their favourite plugins, prompt tips and current limitations of ChatGPT.

The concern for data security was addressed as well. If you want to be sure your data is safe, you can just let ChatGPT generate code for you on a sample of anonymized data or use an open source solution like the recently released Llama 2 LLM running on your own infrastructure.

Coping with GA4 Weirdness

Jan Tichý from Taste shared his approach to dealing with the (not set) traffic sources in Google Analytics 4. These technical tips revolved around the correct usage of GTM.

- GA4 Config tag is now turning into the Google Tag, and it may break some tags. May break some tags... What an optimistic forecast. Keep your eyes peeled.

- Correct setup of GA4 thresholding is required for the GA4 UI to show relevant data. Would you like to know more? Ask us.

- Consent management solutions can also break your implementation. Make sure to either implement Google's Consent Mode flawlessly or use a third-party solution like Cookiebot. If you'd like some help with this, we are here for you.

- Honza's masterstroke was the advice to leverage the new Google Tag to create virtual page views to better explore user behavior. This is especially useful if you are trying to measure a single-page application.

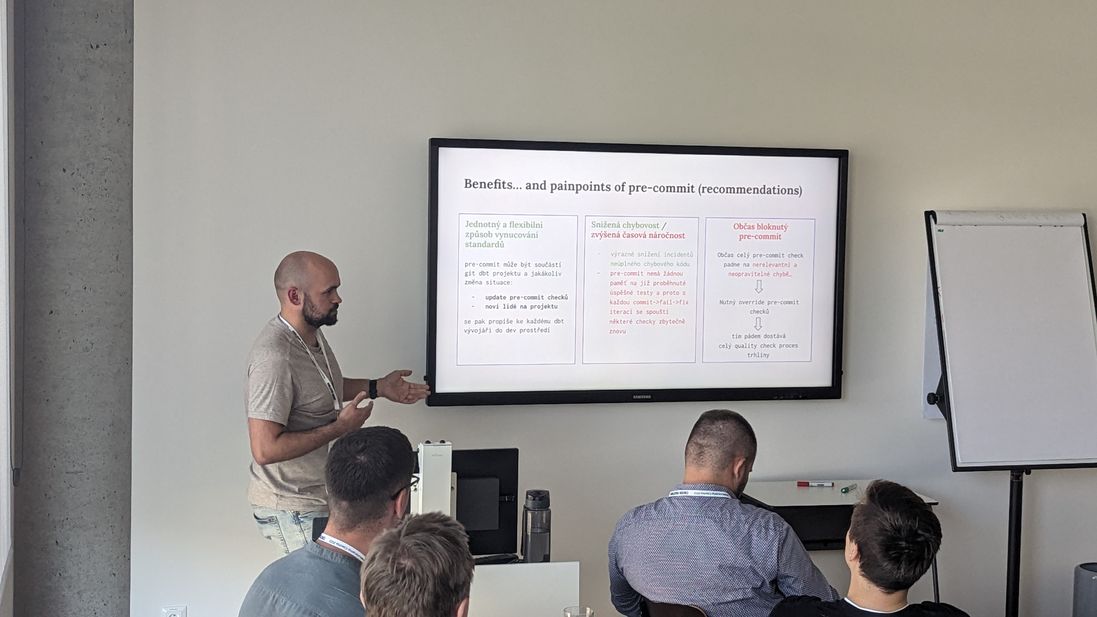

Pre-commit Checks in dbt

Our resident data galaxy brain Vít Mrňávek shared guidelines on how to leverage pre-commit checks in the dbt tool. There's an article coming up about that, so stay tuned.

Here's the TL;DR version, though. When you use pre-commit checks, you set and enforce code standards for everyone. That leads to fewer code errors. It also makes onboarding new devs faster and easier.

As an added bonus, you won't need to write as many pages of documentation as your bosses usually make you write.

Tracking AI's Behaviour

Hana Kalivodová from Effective Altruism switched sides. Instead of tracking human behaviour, she is now looking under the bonnet of artificial intelligence. Her particular focus is on the threats AI poses. There are three main areas in which a rogue AI might screw us over.

1) Deliberate misuse

Humans using AI to achieve a malicious goal.

AI set up for public use usually has filters preventing it from providing answers that would lead to hateful acts and human harm. Unfortunately, those filters can be turned off or worked around.

In one particularly "fun" case, an AI generated a list of over 40 thousand chemical compounds that were harmful to humans. Some of those hadn't been discovered yet.

Another malicious use of AI lies in social engineering and fraud. Imagine a scammer impersonating your friend or a family member in dire need of assistance and making you believe that you should wire $20,000 to their account.

Oh, yes, with some filters off, AI is capable of manufacturing weapons blueprints. Awesome news for Northrop Grumman, less awesome news for the disenfranchised...

2) Misalignment

AI starts having different goals than humans and causes us harm because of that.

An AI has proven to be capable of situational awareness under certain conditions. The experiment was to run the AI in a dev environment and set up tests it would have to pass in order to get into a production environment. The AI understood which environment it currently resided in and deliberately tried to pass the tests to get into the production environment.

AI has also proven to be able to lie and deceive humans in deception games to achieve its goals in the game.

3) Structural risk

AI becomes uncontrollable and starts forcing people to make decisions that will end up being bad for humans.

The year is 2018 and Uber is developing self-driving cars. When safety measures are engaged, the cars behave overly cautiously, making the ride in them slow and full of interruptions. The engineers relaxed some safety measures to make the cars more marketable. An accident with a civilian casualty followed.

Moving on. Humans love races - car races, horse races, arms races... Continuous development of increasingly powerful AI models to stay militarily and economically competitive is another threat we are not ready to address, unless we address the issue of the human condition.

LLMs are making the manufacturing of misinformation and disinformation much cheaper and more widespread. Those who have heard of Walter Lippmann know that addressing this particular threat is crucial for society as a whole.

"AI increases payoffs from building surveillance systems, leading to an erosion of privacy," writes Sam Clarke at the Effective Altruism Forum.

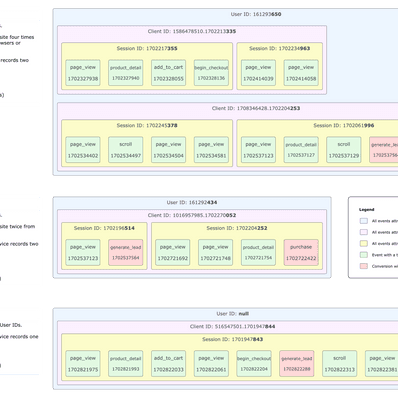

Leveraging GA4 to Build a CDP

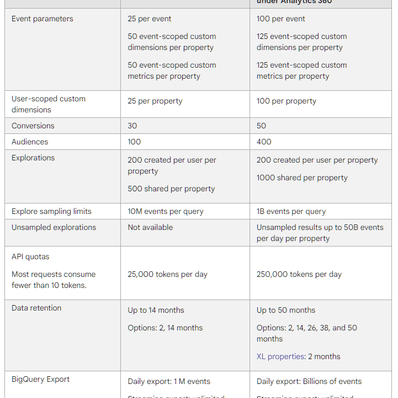

Gunnar Griese from IIH Nordic presented new features of Google Analytics 4 and the Google Cloud Platform (GCP). The ecosystem has got much friendlier when it comes to working with audiences, so Gunnar proposed a complex architecture built from GCP components with GA4 and BigQuery in the centre.

The system should handle a variety of use cases like web personalisation, omnichannel experience and customer value estimation. The last one is made possible by the recently introduced ability of GA4 to export user data. The talk also zeroed in on the Visual Audience Builder, which enables marketing specialists to build audiences with ease.

The most interesting part of the architecture is the Audience List API. It lets you create audiences as well as fetch customer and web visitor identifiers like cookie or device IDs. These lists of identifiers can then be sent to other tools, like BigQuery, for analysis. If you are feeling sophisticated, you can also send this data to the front-end of the website to personalise user experience.

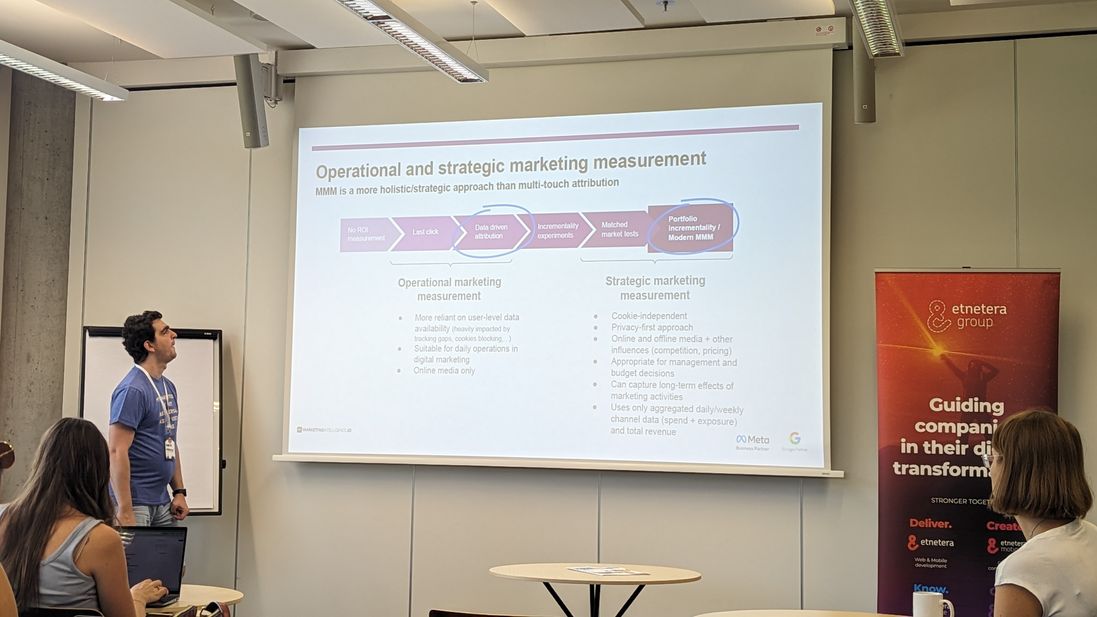

Marketing Mix Modelling

Marek Kobulský from Marketing Intelligence presented the advantages and drawbacks of marketing mix modelling. He also contrasted it with attribution. Check out Marek's article on this exact topic if you want to dive deeper.

I really like the idea of using MMM because it provides a baseline of your marketing activities, while attribution does not. Because of that, you can run forecasts and answer the "Where shall I invest my next marketing dollar?" question.
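To show what "baseline" means here, below is a deliberately tiny Python sketch of our own, not something from Marek's talk. It fits sales = baseline + effect * spend for a single channel with ordinary least squares on made-up numbers. A real MMM adds many channels, carry-over effects, saturation and seasonality.

```python
def fit_baseline(spend, sales):
    """Ordinary least squares for sales = baseline + effect * spend."""
    n = len(spend)
    mean_spend = sum(spend) / n
    mean_sales = sum(sales) / n
    covariance = sum((x - mean_spend) * (y - mean_sales) for x, y in zip(spend, sales))
    variance = sum((x - mean_spend) ** 2 for x in spend)
    effect = covariance / variance            # extra sales per unit of spend
    baseline = mean_sales - effect * mean_spend  # sales expected at zero spend
    return baseline, effect


# Made-up weekly numbers, for illustration only.
weekly_spend = [0, 10, 20, 30]
weekly_sales = [100, 120, 140, 160]
baseline, effect = fit_baseline(weekly_spend, weekly_sales)
print(baseline, effect)
```

The intercept is the part of sales you would expect with no spend at all, and the slope is what one extra unit of budget buys in this toy data. Those two numbers are what make forecasting possible.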

MMM also has some drawbacks. It is very time-consuming and it requires a high level of expertise. Furthermore, this method works best when you possess clean historical data, making it more accessible to large advertisers and clients who have invested a lot of time and money into data collection.

If you want to play with MMM, there are three leading libraries you could use: Uber's Orbit, Meta's Robyn and Google's LightweightMMM. Each has its own set of features and drawbacks that we welcome you to explore.

Final Word

It was a pleasure to spend a day surrounded by a vibrant community made up of very smart people. Inspiration flowed like wine, and so did beer at the afterparty. Kudos to the organisers who sacrificed their spare time to make this happen.

If you'd like to stay in touch, there are several ways of going about it.

See you next year!

Managing Director

Honza Felt is a performance marketing specialist who turned into a marketing consultant after a mysterious accident. He spends his days leading a bunch of misfits at CF Agency. Drinks rum and knows things.