How to Check GA4 Daily Export Limit to BigQuery

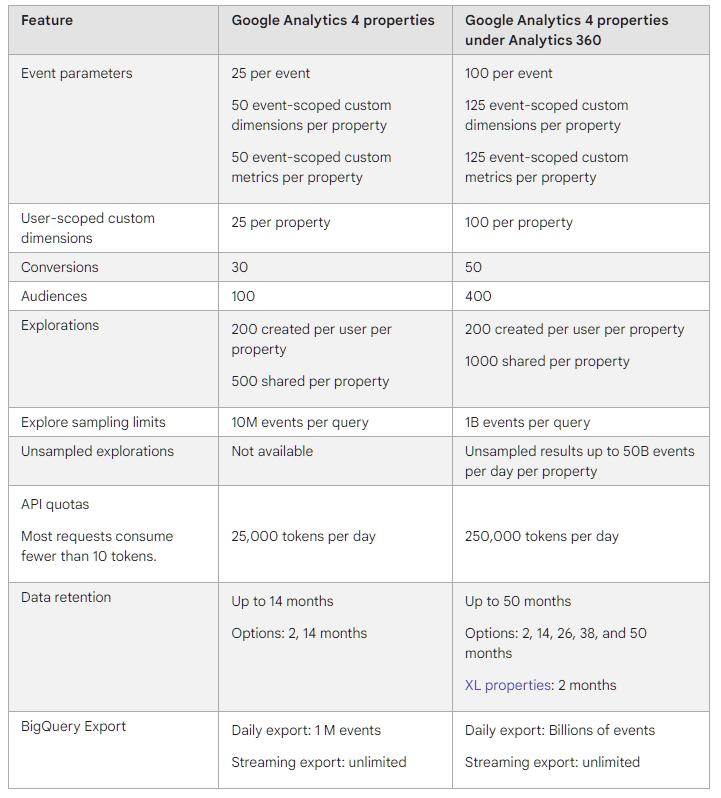

Google launched Analytics 360. Along with that came limits imposed on the free version of GA4. Monitoring the limits of event parameters and conversions is easy, but what if you want to track your daily exports to BigQuery? Standard GA reports won't cut it.

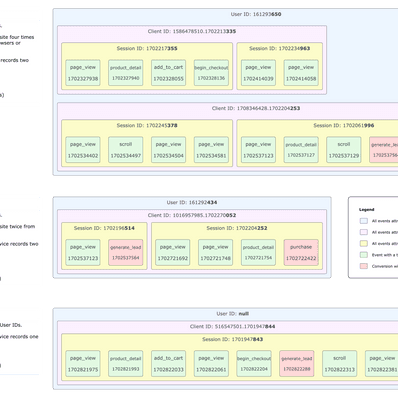

When you look at the image above, there are only a few features to watch out for. The most important one is the very last one - daily exports to BQ.

Find out how close you are to hitting that daily limit of one million events using these two queries.

Use Case for Small Datasets

This query counts all events in a day and applies simple logic to the result. If the amount of exported events in a day is over the limit, the query returns "over the limit".

Should the amount of exported events falls in the 95th, 75th or 50th percentile, the query returns the percentile range.

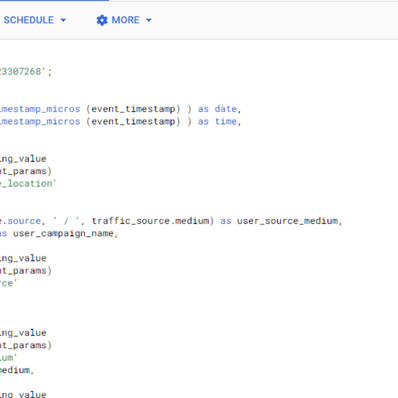

Use the following query only if running it would not exceed 10 MB of the BQ capacity. You can see the processing capacity estimate in the top right corner of the BQ user interface.

![]()

^^ Use this query.

![]()

^^ Do not use this query.

Query Modification

Before you copy-paste the code, let's briefly go over of what you need to customise in order for the query to work.

- Line 5: change your source table

- Line 7: change the starting and ending date

- Lines 24 and 25: comment out the line 24 and comment in the line 25 to see only days when everything is all right.

with ga4_data as (

select

format_date( '%Y-%m-%d', extract (date from timestamp_micros (event_timestamp) ) ) as date,

count (event_name) as event_count

from `projectId.analytics_000000000.events_*` -- change to your source table

where

_table_suffix between '20210901' and '20210930' -- change to your desired date range

group by 1

),

logic as (

select

date,

event_count,

case

when event_count > 1000000 then 'over the limit'

when event_count between 950000 and 1000000 then '95% of the limit'

when event_count between 750000 and 949999 then '75%-94.9% of the limit'

when event_count between 500000 and 749999 then '50%-74.9% of the limit'

else 'all good'

end as limit_status

from ga4_data

)

select * from logic

where limit_status in ('over the limit', '95% of the limit', '75%-94.9% of the limit', '50%-74.9% of the limit')

-- where limit_status = 'all good'

order by date descUse Case for Large Datasets

This query does exactly the same thing the previous one does. Well, almost exactly...

Instead of counting all the rows in the GA4 events table, we query information schema. The benefit of doing this is saving processing capacity. Schema queries consume only 10 MB per use, regardless of how large the table is.

Use the following query only if running the previous one for small datasets would exceed 10 MB of the BQ capacity.

SELECT

PARSE_DATE("%Y%m%d", RIGHT(table_name, 8)) as date,

total_rows,

-- total_logical_bytes,

-- total_billable_bytes,

-- last_modified_time,

-- storage_tier,

case

when total_rows > 1000000 then 'over the limit'

when total_rows between 950000 and 1000000 then '95% of the limit'

when total_rows between 750000 and 949999 then '75%-94.9% of the limit'

when total_rows between 500000 and 749999 then '50%-74.9% of the limit'

else 'all good'

end as limit_status

FROM `projectId.analytics_123456789.INFORMATION_SCHEMA.PARTITIONS`

WHERE table_name NOT LIKE 'events_intraday%'

ORDER BY date DESC;Analysis

What can you actually infer from the results of this check? That depends on your data.

A Few Anomalies

If you have only a couple of days over the limit, find out what happened then. Too many users from acquisition campaigns? Off-line campaigns influencing direct sessions growth? These queries will help you find outliers, so you can do something about them.

Consistent Overages

In case you are consistently over the limit, consider sending fewer events to the GA4 property. For example, sending Core Web Vitals events might be pushing you over the edge.

Flawed user flow or bad web architecture are also possibilities. Users may be hindered in getting to do what they want to do on your site. I'd recommend coming up with a hypothesis and testing it. This query about user flow analysis will be of great help.

You might also genuinely be too big for the free tier of GA4.

Either way, if you get into trouble with GA4 or BigQuery, drop us a line!

This is nothing

We build sophisticated data models that cover

all sorts of reporting needs. Maybe even yours!

Response within 24 hours guaranteed.

Data Engineer

Jiří makes data engineering look easy. His long experience with building ETL pipelines comes in handy when he writes about BigQuery and automation processes.